Project Page on Human-Robot Collaboration

Hong (Herbert) Cai and Yasamin Mostofi, UCSB


| Predicting human visual performance for optimal query | Co-optimization of sensing tour and human collaboration | Optimal path planning for robotic field sensing with human assistance |

Robots are becoming increasingly capable of accomplishing complicated tasks. However, there are still many tasks that robots cannot autonomously perform to a satisfactory level. It is therefore important to properly include humans in certain robotic operations, since robots can benefit tremendously from asking humans for help. Human performance, however, may also be far from perfect, depending on the given task.

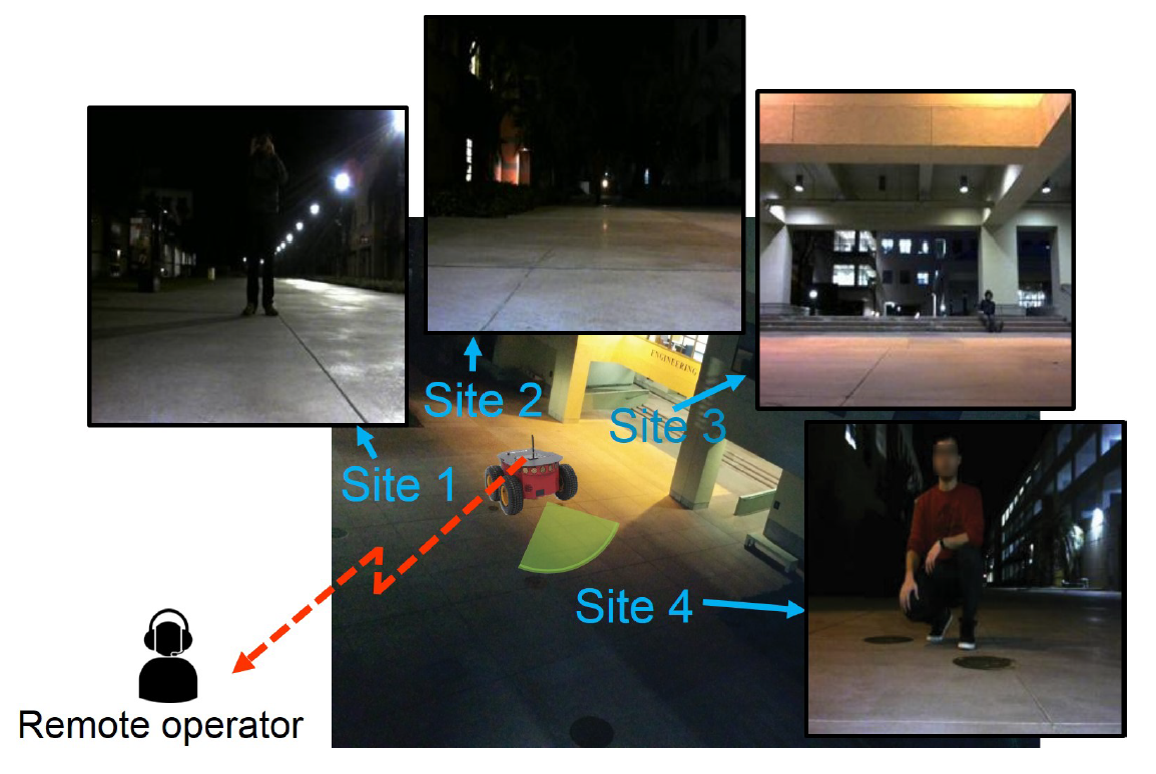

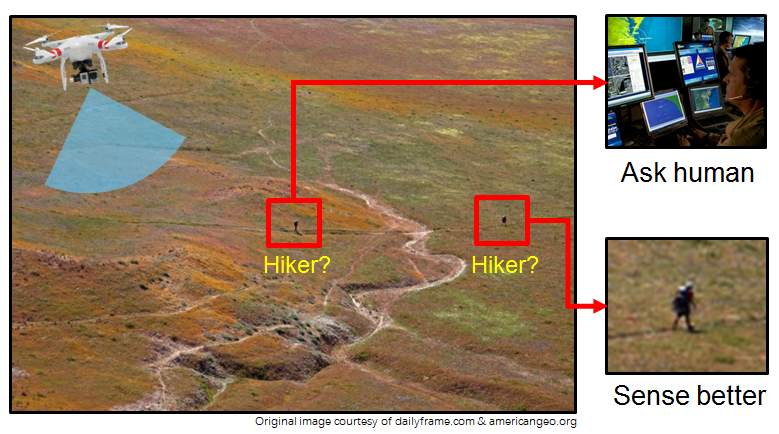

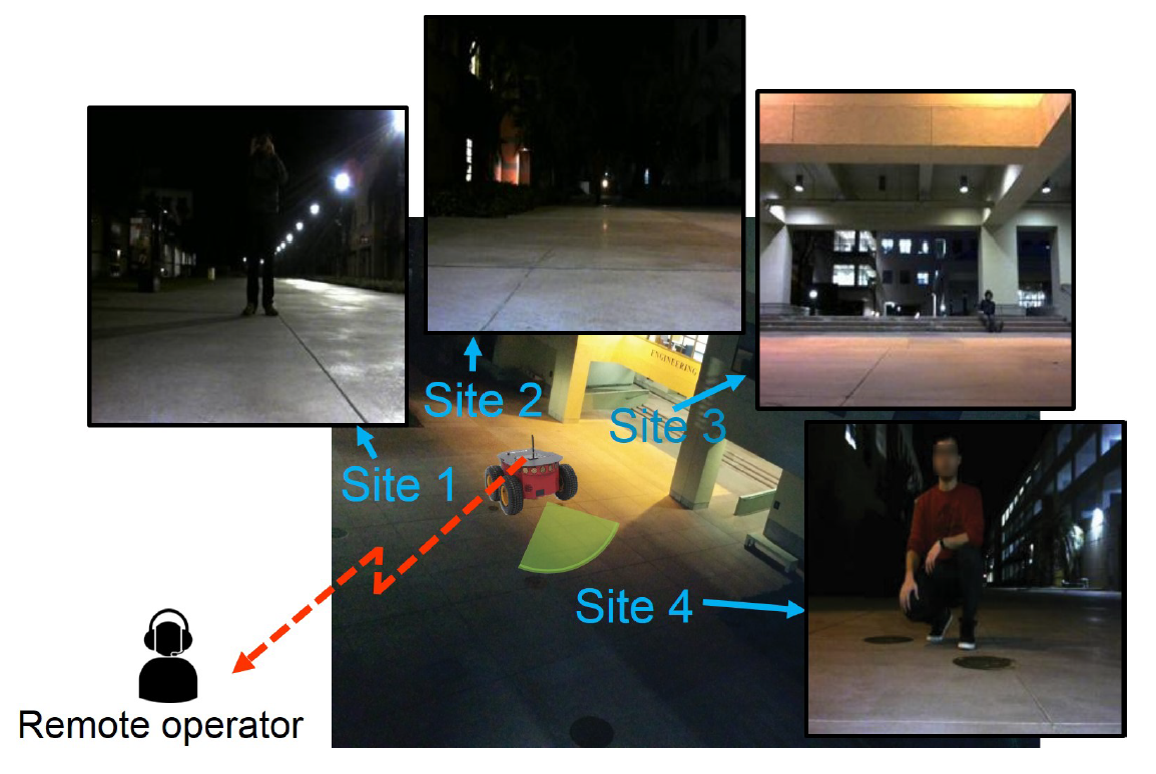

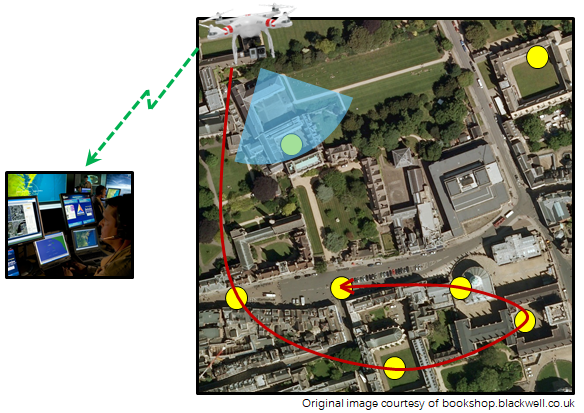

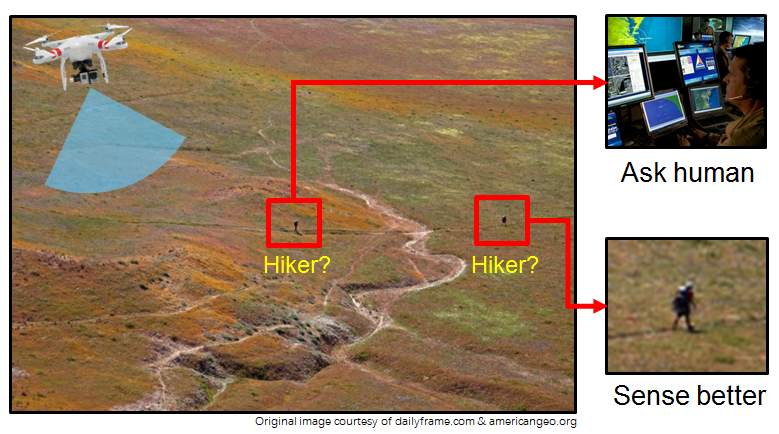

In this project, we design human-robot collaboration techniques by 1) predicting human task performance and response, and 2) incorporating such predictions into the optimization of robot field decision-making, sensing, and path planning. For instance, humans may not be able to perform satisfactorily if the given task is too challenging (e.g., a visual search task where the captured image is extremely dark). Thus, in order to properly query humans, the robot needs to predict human performance.

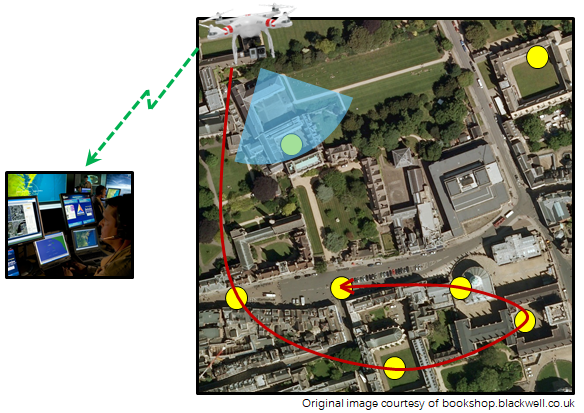

We show how to equip robots with the capability to predict human performance. More specifically, for visual tasks (e.g., visual searches), we propose a machine learning-based pipeline that can probabilistically predict human visual performance for any visual input (RSS16 paper, project page, data/code). We have released the code and data for the learning pipeline, which is based on several MTurk datasets (RSS16 project website). Such predictions can then be utilized for the optimization of human-robot collaboration in several different applications, as we have shown with several experiments on our campus (RSS16 paper, ACC15 paper). In other parts of our project, we have focused on the joint optimization of human collaboration and robotic field decision-making (e.g., sensing, path planning, communication) under resource constraints. For instance, we have shown how robotic site visits and human collaboration can be co-optimized, and have mathematically characterized the optimal solution ([TRO 2019], [Book Chapter]).
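As a rough illustration of how predicted human performance could feed into a query decision, the sketch below greedily picks which sites to send to a human operator under a query budget. It is a simplified toy example, not the optimization formulated in the papers: the function name `select_queries`, the inputs `p_robot` and `p_human`, and all probability values are hypothetical, and path planning and other resource constraints are ignored.

```python
def select_queries(p_robot, p_human, max_queries):
    """Greedy toy rule for choosing which sites to ask the human about.

    p_robot[i]: assumed probability that the robot alone is correct at site i.
    p_human[i]: predicted probability that the human is correct at site i.
    max_queries: assumed budget on the number of questions to the human.
    Returns the sorted indices of the sites to query.
    """
    # Predicted benefit of asking the human instead of relying on the robot.
    gains = [(h - r, i) for i, (r, h) in enumerate(zip(p_robot, p_human))]
    # Keep only sites where asking is predicted to help, largest gain first.
    helpful = sorted((g for g in gains if g[0] > 0), reverse=True)
    return sorted(i for _, i in helpful[:max_queries])


# Hypothetical values for four sites (illustration only).
p_robot = [0.9, 0.55, 0.6, 0.7]
p_human = [0.95, 0.9, 0.5, 0.85]
chosen = select_queries(p_robot, p_human, max_queries=2)
print("Sites to query:", chosen)
```

In this toy example the human is not queried about the third site, since the predicted human performance there is lower than the robot's own.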

In [TRO 2020], we have shown how the robot can jointly classify objects, even under poor sensing quality, by deducing object similarity and utilizing it in its sensing, path planning, and object classification. More specifically, even when the robot cannot classify individual objects due to poor sensing, we have shown that the correlation coefficient of its DNN feature vectors carries vital information on object similarity, which the robot can then utilize to jointly classify objects. Our campus experiments further confirm the proposed framework. Please see the papers below for more details.
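To make the correlation idea concrete, here is a minimal sketch that computes the Pearson correlation coefficient between pairs of feature vectors and flags pairs that look similar. The feature values, the object names, and the 0.8 threshold are all made up for illustration; in practice the vectors would come from a DNN, and the papers should be consulted for how similarity is actually used in the joint classification.

```python
import math
from itertools import combinations


def pearson(u, v):
    """Pearson correlation coefficient between two equal-length vectors."""
    n = len(u)
    mean_u, mean_v = sum(u) / n, sum(v) / n
    du = [a - mean_u for a in u]
    dv = [b - mean_v for b in v]
    num = sum(a * b for a, b in zip(du, dv))
    den = math.sqrt(sum(a * a for a in du) * sum(b * b for b in dv))
    # A constant vector has zero variance; treat it as uncorrelated.
    return num / den if den > 0 else 0.0


# Hypothetical low-dimensional stand-ins for DNN feature vectors.
features = {
    "obj_a": [0.9, 0.1, 0.8, 0.2],
    "obj_b": [0.8, 0.2, 0.9, 0.1],
    "obj_c": [0.1, 0.9, 0.2, 0.7],
}

SIMILARITY_THRESHOLD = 0.8  # assumed value, for illustration only

similar_pairs = [
    (a, b)
    for a, b in combinations(sorted(features), 2)
    if pearson(features[a], features[b]) > SIMILARITY_THRESHOLD
]
print("Pairs deemed similar:", similar_pairs)
```

Here `obj_a` and `obj_b` are strongly correlated and would be treated as likely belonging to the same class, while `obj_c` is negatively correlated with both.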

H. Cai and Y. Mostofi, "Exploiting Object Similarity for Robotic Visual Recognition," IEEE Transactions on Robotics, July 2020. [pdf][bibtex]

H. Cai and Y. Mostofi, "Human-Robot Collaborative Site Inspection under Resource Constraints," IEEE Transactions on Robotics, volume 35, issue 1, Feb. 2019. [pdf][bibtex]

E. Prashnani*, H. Cai*, Y. Mostofi, and P. Sen, "IQAPP: Image Quality Assessment through Pairwise Preference," in proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), to appear, June 2018. [pdf][bibtex] (*equal contribution)

H. Cai and Y. Mostofi, "When Human Visual Performance is Imperfect - How to Optimize the Collaboration between One Human Operator and Multiple Field Robots," Trends in Control and Decision-Making for Human-Robot Collaboration Systems (editor: Y. Wang), Springer, February 2017. [pdf][bibtex]

H. Cai and Y. Mostofi, "Asking for Help with the Right Question by Predicting Human Visual Performance," in proceedings of Robotics: Science and Systems (RSS), June 2016. [pdf][bibtex]

H. Cai and Y. Mostofi, "A Human-Robot Collaborative Traveling Salesman Problem: Robotic Site Inspection with Human Assistance," in proceedings of the American Control Conference (ACC), July 2016. [pdf][bibtex]

H. Cai and Y. Mostofi, "To Ask or Not to Ask: A Foundation for the Optimization of Human-Robot Collaborations," in proceedings of the American Control Conference (ACC), July 2015. [pdf][bibtex]

We thank Arjun Muralidharan, Chitra Karanam, and Saandeep Depatla for helping with the experiments.