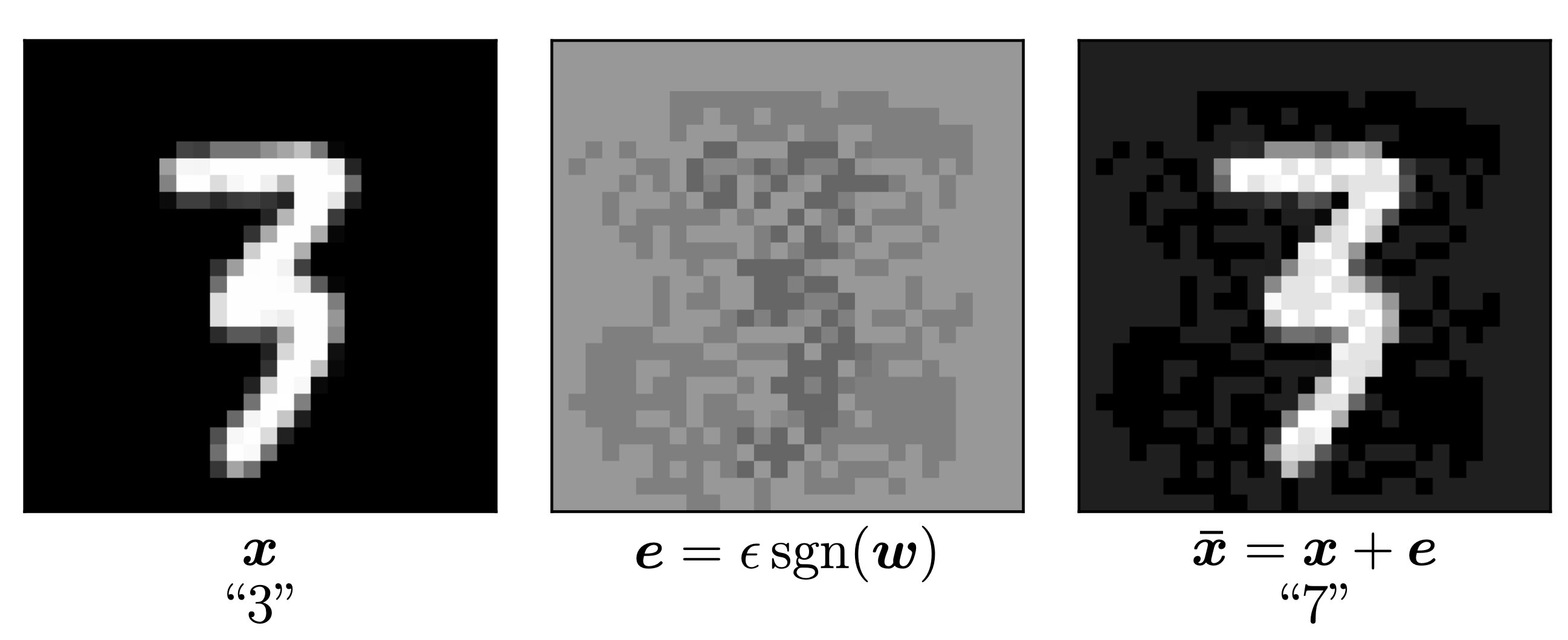

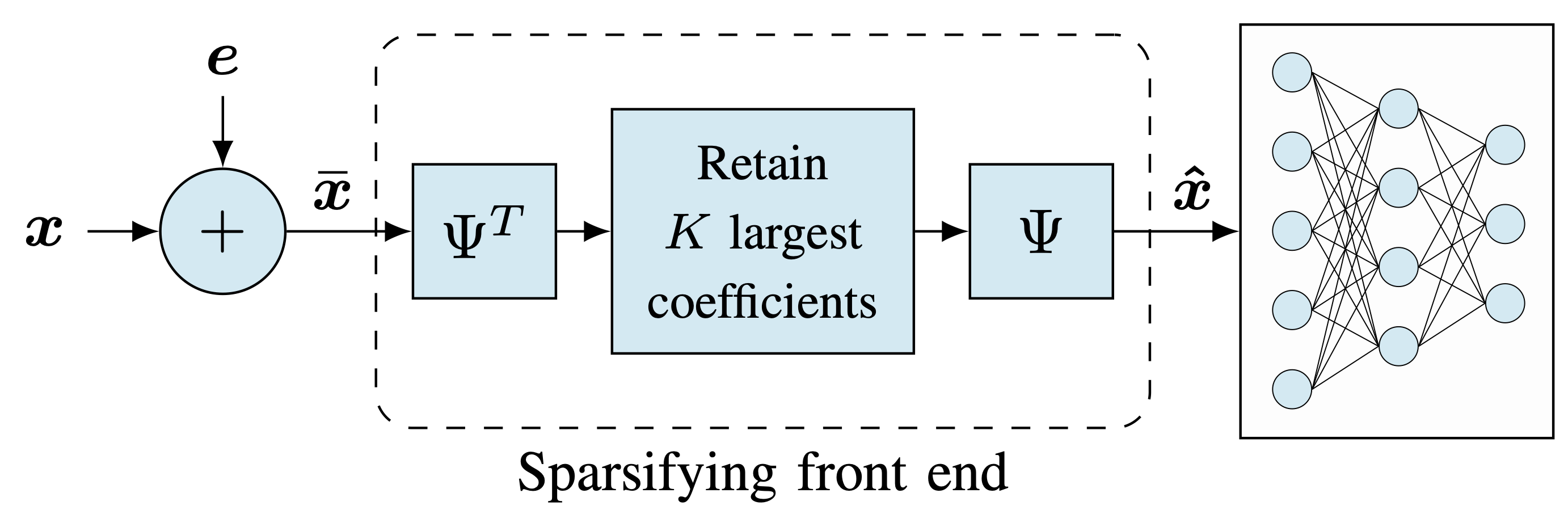

- Deep neural networks are vulnerable to small adversarial perturbations. We study a front-end signal processing defense that exploits the sparsity of natural data.

- We show that sparsity-based defenses are provably effective for linear classifiers, reducing the impact of $\ell_\infty$-bounded attacks by a factor of roughly $K/N$, where $N$ is the data dimension and $K$ is the sparsity level.

- We then extend our approach to deep networks using locally linear modeling.
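To make the idea of a sparsifying front end concrete, here is a minimal sketch in Python with NumPy. It is an illustration under stated assumptions, not the implementation from the papers listed below: the function name `sparsify`, the random orthonormal basis, the synthetic exactly $K$-sparse input, and the values of `N`, `K`, and `eps` are all hypothetical choices. The front end keeps the $K$ largest-magnitude coefficients of the input in the basis and discards the rest; the script then compares the output distortion of a linear classifier under a sign-based $\ell_\infty$ perturbation with and without the front end.

```python
import numpy as np


def sparsify(x, basis, k):
    """Keep the k largest-magnitude coefficients of x in an orthonormal basis.

    Hypothetical helper for illustration; `basis` holds the basis vectors
    as columns and is assumed to be orthonormal.
    """
    coeffs = basis.T @ x                                  # coefficients of x in the basis
    keep = np.argsort(np.abs(coeffs))[coeffs.size - k:]   # indices of the k largest magnitudes
    sparse = np.zeros_like(coeffs)
    sparse[keep] = coeffs[keep]
    return basis @ sparse                                 # map back to the signal domain


rng = np.random.default_rng(0)
N, K, eps = 256, 8, 0.01   # assumed dimension, sparsity level, and attack budget

# Assumption: a random orthonormal basis stands in for a real sparsifying basis.
basis, _ = np.linalg.qr(rng.standard_normal((N, N)))

# Assumption: a synthetic input that is exactly K-sparse in that basis.
true_coeffs = np.zeros(N)
support = rng.choice(N, size=K, replace=False)
true_coeffs[support] = rng.choice([-1.0, 1.0], size=K) * rng.uniform(5.0, 10.0, size=K)
x = basis @ true_coeffs

w = rng.standard_normal(N)   # weights of a hypothetical linear classifier
e = eps * np.sign(w)         # l_inf-bounded perturbation aligned with the weights

# Change in the classifier output w^T x caused by the perturbation.
undefended = abs(w @ e)
defended = abs(w @ (sparsify(x + e, basis, K) - sparsify(x, basis, K)))

print(f"distortion without front end: {undefended:.4f}")
print(f"distortion with front end:    {defended:.4f}")
```

The numbers printed are for a single random instance with a perturbation that ignores the front end, so they are not meant to reproduce the $K/N$ result. The sketch only shows where the front end sits in the pipeline and how the distortion at the classifier output can be measured.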

Publications

- S. Gopalakrishnan, Z. Marzi, U. Madhow, R. Pedarsani, "Robust Adversarial Learning via Sparsifying Front Ends," arXiv:1810.10625.

- S. Gopalakrishnan, Z. Marzi, U. Madhow, R. Pedarsani, "Combating Adversarial Attacks Using Sparse Representations," in International Conference on Learning Representations (ICLR) Workshop Track, Vancouver, 2018.

- Z. Marzi, S. Gopalakrishnan, U. Madhow, R. Pedarsani, "Sparsity-based Defense Against Adversarial Attacks on Linear Classifiers," in IEEE International Symposium on Information Theory (ISIT), Vail, 2018.