Menu:

Behrooz Parhami's Research Program

Page last updated on 2022 September 11

Professor Parhami has been involved in computer science and engineering research for five decades, beginning with the publication of a singly-authored paper in IEEE Trans. Computers in 1972 [PDF]. During this period, he has participated in many research endeavors and has published more than 300 technical papers in peer-reviewed journals and international conferences (see his list of publications).

His research projects entail aspects of computer design and architecture that are captured by the abbreviation PQRS, with each aspect defined by a set of keywords:

Performance, incorporates latency, speedup, throughput

Quality, embodies accuracy, parts/energy economy, stability

Reliability, encompasses correctness, timeliness, safety

Scalability, spans modularity, packageability, expandability

[Note that Professor Parhami's use of PQRS as a descriptor for his research program, since the early 2000s, predates Steven Spielberg's use in 2007 as the working title for a block-building computer game; telephone dials, however, have used PQRS for several decades now (Spielberg also has used LMNO elsewhere). PQRS should not be confused with SPQR (for "Senatus Populus Que Romanus"), or "The Senate and the People of Rome," a motto that appeared on ancient Rome's public buildings, military gear, armors, and coins. Given that Professor Parhami began with ABC at age five, he should be able to reach XYZ before retirement!]

[Note that Professor Parhami's use of PQRS as a descriptor for his research program, since the early 2000s, predates Steven Spielberg's use in 2007 as the working title for a block-building computer game; telephone dials, however, have used PQRS for several decades now (Spielberg also has used LMNO elsewhere). PQRS should not be confused with SPQR (for "Senatus Populus Que Romanus"), or "The Senate and the People of Rome," a motto that appeared on ancient Rome's public buildings, military gear, armors, and coins. Given that Professor Parhami began with ABC at age five, he should be able to reach XYZ before retirement!]

In recent years, the focus has somewhat expanded to also include TUVW, as defined below:

Testability, during design, implementation, and field use

Testability, during design, implementation, and field use

Usability, while requiring minimal expertise and training

Versatility, to allow mass production for economy of scale

Workability, a synonym for practicality in design and use

Within the rather broad field of computer design and architecture, Professor Parhami's active research interests fall within three subareas:

Computer Arithmetic, where he studies unconventional number representation schemes and has pioneered the discussion of generalized signed-digit number systems, along with associated encoding schemes and arithmetic algorithms, as a unified framework for dealing with redundant representations and finding optimal designs with a wide range of technologies including binary, multi-valued, and optical logic implementations. He also works on arithmetic error codes and table-based implementations of arithmetic functions. A recent area of focus has been hybrid analog-digital number representations in computing and in nature.

Parallel Processing, where he deals with scalability issues, interconnection architectures, and cost-effective designs for various application areas, including special-purpose search processors. His contributions in this area include the design of one of the first database processors, a widely referenced classification and survey of associative processor architectures, and, more recently, design of robust algorithms for meshes, proposal and evaluation of periodically regular chordal ring networks, unifying theories for interconnection networks, and a variety of hierarchical or multilevel interconnection networks.

Dependable Computing, also known as fault-tolerant computing, where he has contributed to the design of fault-tolerant associative processors, studied properties of arithmetic error codes, and proposed a systematic data-driven methodology for the design and evaluation of ultrareliable hardware/software systems and associated voting schemes. He also works on the design of robust parallel algorithms that exhibit graceful degradation even without redundant hardware resources. Professor Parhami's original multi-level model of dependable computing informs the structure of his graduate-level textbook on the topic.

Besides the aforementioned areas of current research interest, Professor Parhami was heavily involved in research on technology transfer and language-dependent issues in computer technology and applications until the late 1980s. Within this broader research agenda, he made numerous contributions to adapting information technology to the needs of Persian-language computing and user interfaces and is recognized as a pioneer in this area.

Research interests and projects in the areas above are outlined in the sections that follow. A description of Professor Parhami's past research contributions can be found elsewhere.

Research in Computer Arithmetic

In the following descriptions, selected items from B. Parhami's list of publications are provided in brackets.

Defining the Field

Arithmetic is a branch of mathematics that deals with numbers and numerical computation. Arithmetic operations on pairs of numbers x and y include addition (producing the sum s = x + y), subtraction (yielding the difference d = x − y), multiplication (resulting in the product p = x × y), and division (generating the quotient q = x ÷ y). Computer arithmetic deals with methods of representing integers (fixed-point numbers) and real values (e.g., floating-point numbers) in digital systems and efficient algorithms for manipulating such numbers by means of hardware circuits or software routines [203]. On the hardware side, various types of adders, subtractors, multipliers, dividers, square-rooters, and circuit techniques for function evaluation are considered [179] [259]. Both abstract structures and technology-specific designs are dealt with. Software aspects of computer arithmetic include complexity, error characteristics, stability, and certifiability of computational algorithms.

Areas of Work

Performance in many computer applications is critically dependent on the speed of arithmetic operations. The discovery, in the mid 1990s, of design flaws in the arithmetic circuits of Intel's Pentium processor aptly demonstrated the need for a more systematic approach to the design and verification of arithmetic algorithms and associated hardware designs, especially when they are extensively optimized for speed. Criteria other than speed are also becoming important in the design of algorithms and hardware for computer arithmetic. Examples include accuracy, high throughput, fault tolerance, certifiability, and low power consumption. Professor Parhami's research deals with all of the aspects above. Because at very high clock rates, carry propagation (even with the fastest carry networks) becomes a limiting factor for speed, a key area of Professor Parhami's research deals with redundant number representations and the associated carry-free arithmetic algorithms.

Current Threads

Redundant number representations [066] [086] [156] [196] [197] [200] [205] [219] [231] [242]

Systolic and on-line arithmetic [089] [187] [188] [189]

Configurable arithmetic arrays [140] [187]

VLSI-based and ASIC design [130] [136] [168] [194] [205] [206] [219] [226] [241] [250] [257] [ieeetc]

Theory and limits of computer arithmetic [050] [174] [189] [190] [198] [207]

Analysis and optimization of lookup table size [085] [142] [173]

Dependable and fault-tolerant arithmetic [201] [204] [228]

Residue arithmetic and conversion algorithms [092] [108] [129] [197] [200] [254] [258]

Research in Parallel Processing

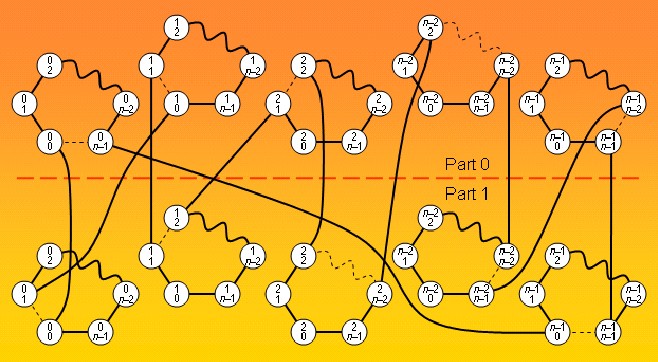

In the following descriptions, selected items from B. Parhami's list of publications are provided in brackets. The diagram below depicts a Hamiltonian cycle in a biswapped network, a particular 2-level hierarchical architecture, built of Hamiltonian components (based on joint work with Dr. Wenjun Xiao and others [235]).

Defining the Field

In the literal sense of the term, parallel processing, that is, using multiple processors and/or controllers to handle various tasks concurrently, is found in virtually every computer. Research in parallel processing, however, has a somewhat narrower focus: that of multiple processors or computers (nodes, for short) cooperating to solve a single computational problem with greater speed, throughput, cost-effectiveness, or reliability compared with any uniprocessor [162]. The nodes, and their communication mechanisms, can be homogeneous or heterogeneous. Control can be centralized or distributed. The programming model can entail a shared address space or explicit message passing. Memory access can be uniform or nonuniform in latency. Communication between processors can be direct (point-to-point) or indirect (multilevel switched). These variations, and their many possible combinations, are studied from the viewpoint of unifying models or theories, fundamental limits, distinguishing properties, and suitability to specific application domains. Both hardware design/construction issues and software/algorithm aspects are actively pursued.

Areas of Work

Parallel processing, once viewed as an exotic technology, is now a pervasive one. Small shared-memory multiprocessors are sprouting everywhere and large-scale multicomputers built of commodity components have become quite cost-effective. In the domain of massive parallelism, tens of thousands of processors already appear in some systems and multimillion-node supercomputers are being contemplated. With so many processors, optimization of processor design and its various interfaces, scalability of interconnects, and tolerance to processor or link failures are major considerations. These problems have received a lot of attention but much remains to be done. Professor Parhami's work centers on the interface between parallel architectures and algorithms. He studies the problem of algorithm design for general-purpose parallel computers and its "converse," the provision of architectural features in systems to help improve computational efficiency, economy, and reliability. These take the form of more efficient communication or fault tolerance mechanisms and, at the extreme, the design of algorithm-based special-purpose architectures. Professor Parhami is also involved in studying the theoretical foundations of large-scale and hierarchical interconnection networks (INs) for parallel processing.

Current Threads

INs based on algebraic structures [191] [208] [211] [215] [225] [227] [229] [233] [235] [236] [238] [239] [247] [249] [251] [253] [ipl]

INs based on number theory [137] [216] [217] [218] [220] [221] [230] [244] [245]

Complex networks [225] [234] [237] [246] [251] [256] [ijss]

Dependable and fault-tolerant parallel processing [192] [202] [232] [251] [jsc]

Multilevel networks for highly parallel systems [132] [141] [210] [222] [224] [235] [239] [251] [253] [ipl]

Data-driven control of processor arrays [130] [163] [168] [194] [206]

Parallel algorithms and complexity [090] [115] [138] [195] [212] [253]

Pruned or periodic interconnection networks [131] [151] [160] [169] [209] [211] [212] [230] [233] [236] [238] [244] [245]

Search processor design and associative processing [083] [115] [148]

Network packageability and VLSI layout [131] [159] [185] [186] [193]

Research in Dependable Computing

In the following descriptions, selected items from B. Parhami's list of publications are provided in brackets.

Defining the Field

Dependability concerns are integral parts of engineering design. Aspects and/or measures of dependability for computer systems include reliability, availability, performability (sustained computational capability), safety, security, testability, and maintainability. Ideally, we would like our computer systems to be perfect, always yielding timely and correct (or at least safe) results. However, just as bridges collapse and airplanes crash occasionally, so too computer hardware and software cannot be made totally immune to undesirable or unpredictable behavior. Despite great strides in component reliability and programming methodology, the exponentially increasing complexity of integrated circuits and software products makes the design of perfect computer systems nearly impossible. The field of dependable computing [096] [text] is concerned with studying the causes of imperfection in computer system (impairments to dependability), tolerance methods for ensuring correct, safe, or timely operation despite such impairments, and tools for evaluating the quality of proposed or implemented solutions.

Areas of Work

Methods for ensuring the uninterrupted and reliable functioning of digital computers in the presence of component faults and subsystem malfunctions are increasingly in demand as such systems expand in hardware and software complexity, perform a wider spectrum of critical functions, interact with a growing number of minimally trained users, and are expected to endure harsh or hostile operating environments. Thus, redundancy techniques, that were once limited to high-profile space and military projects, are becoming commonplace in run-of-the-mill computer systems. Unlike much work in the area of dependable computing, Professor Parhami's focus is not solely on hardware reliability or software certification. Rather, he views result correctness as the central theme. His work thus combines elements of hardware redundancy, architectural flexibility, and algorithm robustness to achieve result correctness and graceful performance degradation. Because system reconfiguration and elimination of single points of failure invariably involve the use of multiple processors, Professor Parhami's projects in dependable computing are intimately related to his research on parallel processing.

Current Threads

Dependable and fault-tolerant computer arithmetic [200] [201] [204] [228]

Robust algorithms and architectures [152] [166] [192] [228]

Fault tolerance of interconnection networks [183] [224] [239] [251]

Methodology and unifying concepts [096] [133] [150] [199]

Testing and fault tolerance for processor arrays [088] [122] [140]

Voting schemes and data fusion [075] [094] [101] [118] [138] [223] [232]

Broader Research Interests

Besides the three main focus areas of computer arithmetic, parallel processing, and dependable computing, Professor Parhami is engaged in research projects that cut across many subfields of computer engineering. In the following descriptions, selected items from B. Parhami's list of publications are provided in brackets.

Defining the Field

Engineering is the art of applying scientific knowledge to the construction of artifacts and processes that serve useful functions, while satisfying practical constraints on cost, usability, and reliability. Engineers are basically links between scientists and ordinary people. They adapt scientific theories for everyday use and also formulate societal needs and problems in a way that motivates scientists to study those problems and to develop relevant theories for them. Increasingly, engineers, especially those with advanced degrees, also act as scientists in developing and testing new theories themselves. This type of engineer is sometimes indistinguishable from an applied scientist. With the pretext above, computer engineering can be defined as a discipline dealing with the design, implementation, and application of computing systems, subject to constraints on computation speed, available resources (e.g., budget, chip area, power), and service quality. Computer engineers apply computer science theories to the solution of computational problems, combining their knowledge of the relevant theories with engineering judgment. They also contribute to the science of computation by developing new theories that facilitate the realization of dependable, cost-effective systems and/or help explain practical computational phenomena.

Areas of Work

Computer engineering is an exciting field that teems with interesting and challenging problems. From time to time, Professor Parhami studies wide-ranging issues in this field that are not in his primary areas of research focus. These studies are often triggered by his consulting work, teaching assignments (e.g., ideas that motivate the students or enhance the learning environment), and his sense of social responsibility as an educator/engineer/scientist. Many of these are one-time projects that are difficult to categorize. Aspects of these studies are continuations of Professor Parhami's early work on technology transfer and language-dependent considerations in computing (see B. Parhami's Research History).

Current Threads

Digital system design and architecture [165] [213] [eeee]

Role of computer engineering in society [149] [243]

Computer science and engineering education [248] [252] [255]

Professionalism and engineering ethics

Albert Einstein, on Creativity

The secret to creativity is knowing how to hide your sources.