Contact

- +1 805.893.5364

- info@ece.ucsb.edu

- Office: Harold Frank Hall, Rm 4155

- Employment

- Visiting

Dept. Resources

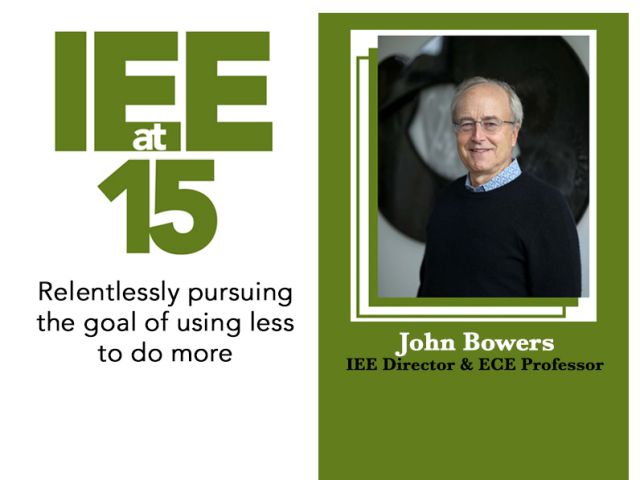

Department of Electrical and Computer Engineering

College of Engineering • UC Santa Barbara

2025 © Regents of the University of California